Be Prompt With It

We often hyperfixate on how AI attempts to understand us when we communicate with it, and on the uncanny valley that results - but what about the other way around?

When we leverage powerful LLMs, especially given the seamless user experience they strive for, it's easy to fall into the trap of thinking that AIs simply come ready to talk: as if they're informed by a higher power, as if they will just magically "know" what you mean and give you the output you want. But as it turns out, even the most powerful AI needs specific directions if you want effective results, which makes crafting a prompt an art of its own! This is where prompt engineering comes into play, which is essentially the craft of AI-whispering. It isn't so much about "spelling everything out" for the machine as about ensuring it can be spoken to at all. After all, when you open a conversation with a chatbot, the entity on the other end is, for all it knows, speaking with organic life for the very first time. By speaking its language, we are empowered to harness its true potential.

Conversational Commandments

We are accustomed to language as a fluid, often nuanced tool for human interaction. We use politeness, implied meaning, and conversational tangents to navigate social situations and establish rapport. However, when we step into the realm of prompt engineering, we must throw much of that approach to communication out the window! Although it is designed to mimic human conversation, an LLM is, at its core, a computational machine. It does not reliably read vague intentions between the lines; it responds best to precise instructions. Our natural inclination to ease into a request with phrases like "Could you maybe..." or "I was just wondering if you could..." is therefore not only superfluous but actively adds "noise" to the prompt, potentially obscuring our intended meaning. In prompt engineering, efficiency and precision take precedence.

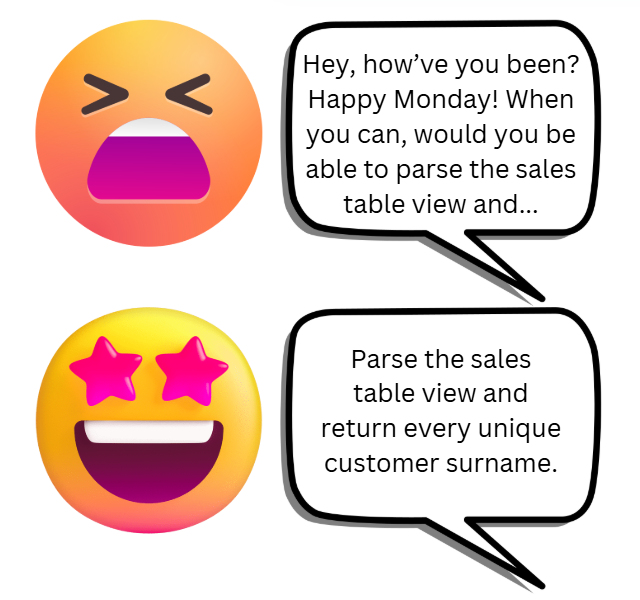

Indeed, politeness, the essential lubricant of human discourse, is just about the rudest thing you could do to an AI. We speak in conditionals and subjunctives to show each other consideration and respect; well, the hard drive says cut the crap! Consider a prompt phrased as: "It would be great if you could write a short summary of this article, but not like it's an emergency or anything - if it's not too much trouble." The LLM will, God willing, still fulfill the core request (a summary), but the qualifying language, meant to be considerate, has rendered the machine just about as exasperated as circuitry can get. Phrases like "when you can" or "if it's not too much trouble" don't communicate any substance; they are mere platitudes, which the machine couldn't care less about. It has no emotional stake in the work it has to perform. By stripping away these polite niceties and opting for direct language, we get more immediate, less ambiguous outcomes. For instance, simply asking "Summarize this article concisely" gives the model a clear and actionable direction. Paradoxically, you are being most polite to the machine when you are as concise as possible.
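To make the idea concrete, here is a minimal Python sketch that strips a few common politeness hedges from a prompt before it is sent. The phrase list and the `strip_filler` helper are hypothetical illustrations, not a standard tool, and the matching is deliberately naive.

```python
import re

# Hypothetical phrase list for illustration; tune it to your own habits.
FILLER_PHRASES = [
    "it would be great if you could",
    "could you maybe",
    "i was just wondering if you could",
    "if it's not too much trouble",
    "when you can",
    "please",
]


def strip_filler(prompt: str) -> str:
    """Remove known politeness hedges, then tidy the leftover spacing and punctuation."""
    cleaned = prompt
    for phrase in FILLER_PHRASES:
        # \b anchors keep us from deleting fragments inside longer words.
        pattern = r"\b" + re.escape(phrase) + r"\b"
        cleaned = re.sub(pattern, "", cleaned, flags=re.IGNORECASE)
    cleaned = re.sub(r"\s+", " ", cleaned)            # collapse runs of whitespace
    cleaned = re.sub(r"\s+([,.?!])", r"\1", cleaned)  # no space before punctuation
    cleaned = re.sub(r",+(?=[.?!]|$)", "", cleaned)   # drop commas orphaned before the end
    cleaned = cleaned.strip(" ,")
    # Capitalize the first letter so the result reads as a command.
    return cleaned[:1].upper() + cleaned[1:]


if __name__ == "__main__":
    print(strip_filler("Please summarize this article when you can."))
    # Summarize this article.
```

Because it matches phrases blindly, this toy would also delete a legitimate "when you can" from a prompt such as "Explain when you can use a tuple." In practice, the better fix is simply to write the direct version in the first place.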

The Bionic Syntax

Beyond simplifying our language, we must understand the importance of structuring our prompts intentionally. A well-written command is more efficient than a vague one, but a command alone is usually not sufficient for more sophisticated requests. Think of your prompt as the framework for what you wish to generate. Much like a house is built from a foundation, walls, and a roof, a prompt is built from components, and which components you include can lead to very different outputs. The bare-minimum framework consists of an explicit instruction, relevant context, and a target for the output. But it can go further. Delimiters, whether quotation marks or backticks around code blocks, create logical containers that separate the material to work on from the instructions about it. Formatting guides describing how you want the response styled help tailor it to your needs. And stylistic parameters, such as tone of voice, further clarify your intent. Much of a prompt's work, then, is not only saying what you want but specifying how you want it, conveyed with as much brevity and specificity as possible. Hemingway would've loved prompt engineering.
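The components listed above can be sketched as a small Python prompt builder. The `PromptSpec` fields, the labels ("Context:", "Format:", "Tone:", "Text:"), and the triple-quote delimiter are all hypothetical choices for illustration; no model requires this exact layout.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt components described above."""
    instruction: str          # explicit instruction: what to do
    context: str = ""         # relevant background the model can't guess
    output_format: str = ""   # target for the output / formatting guide
    tone: str = ""            # stylistic parameter, e.g. tone of voice
    source_text: str = ""     # material to work on, fenced off by delimiters


def build_prompt(spec: PromptSpec, delimiter: str = '"""') -> str:
    """Assemble only the components that were supplied, in a fixed order."""
    parts = [spec.instruction.strip()]
    if spec.context:
        parts.append(f"Context: {spec.context.strip()}")
    if spec.output_format:
        parts.append(f"Format: {spec.output_format.strip()}")
    if spec.tone:
        parts.append(f"Tone: {spec.tone.strip()}")
    if spec.source_text:
        # The delimiter acts as a container, separating the data from the instructions.
        parts.append(f"Text:\n{delimiter}\n{spec.source_text.strip()}\n{delimiter}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    spec = PromptSpec(
        instruction="Summarize the article below.",
        context="The summary is for busy executives.",
        output_format="Three bullet points, each under 20 words.",
        tone="Neutral and professional.",
        source_text="[article text]",
    )
    print(build_prompt(spec))
```

Leaving a field empty simply omits that component, which keeps the prompt as short as the task allows.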

The idea of the "minimum viable prompt" is therefore paramount. Much like the minimum viable product in software development, a minimum viable prompt is the shortest prompt that still carries every detail the task requires. For many who use LLMs for relatively simple tasks, this could be as basic as "Summarize the following article: [article text]" or "Translate this sentence to French: [English text]." This bare framework is not especially descriptive, and far from "perfect," but it acts as a foundation for more sophisticated methods of interacting with AI down the road. Aim for brevity, and add specificity only as the task demands. A poorly framed prompt is inherently inefficient, but specificity should be weighed across the whole exchange rather than drilled to the bone at every step, lest you counterproductively increase your own latency or give your poor machine a stroke. Knowing how to structure a minimum viable prompt gives the casual user a good starting position. However, as we'll see, there are ways to improve on it and get even more accurate results.
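The "start minimal, refine on demand" habit can be sketched in Python using the two example prompts above as templates. The `MINIMAL_TEMPLATES` dictionary and the parenthesized refinement syntax are hypothetical conventions chosen for illustration.

```python
# Minimal templates taken from the two examples above; the dict itself is hypothetical.
MINIMAL_TEMPLATES = {
    "summarize": "Summarize the following article",
    "translate_fr": "Translate this sentence to French",
}


def minimal_prompt(task: str, text: str, refinements: tuple[str, ...] = ()) -> str:
    """Start from the bare template and bolt on refinements only when the task needs them."""
    instruction = MINIMAL_TEMPLATES[task]
    if refinements:
        # Each refinement is one extra, specific constraint: added on demand, not up front.
        instruction += " (" + "; ".join(refinements) + ")"
    return f"{instruction}: {text}"


if __name__ == "__main__":
    print(minimal_prompt("translate_fr", "Good morning, everyone."))
    # Translate this sentence to French: Good morning, everyone.
    print(minimal_prompt("summarize", "[article text]", ("three sentences", "plain language")))
    # Summarize the following article (three sentences; plain language): [article text]
```

The default call produces the bare prompt; refinements are only added once a first result shows what is missing.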

Effective prompting relies not only on well-defined language but also on the correct use of keywords: the specific terms that focus the AI's attention on the core elements of your prompt. Merely stuffing a prompt with keywords is counterproductive. Much like overloading an otherwise well-prepared dish with a single spice, too many keywords can overwhelm and obscure the essential points. Instead, we should view each word as carrying an "intent signal" that guides the AI's processing path. Choosing the right keywords, in the right amounts, yields more targeted answers, especially on complex requests. Analyzing a prompt therefore goes beyond grammar and semantics; it demands an understanding of which keywords are useful and how often to use them to give your bot a clear direction. A well-crafted prompt uses keywords strategically, selecting those with the most impactful signal and deploying them at appropriate densities, in order to generate the highest caliber output.
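As a rough self-check for keyword stuffing, here is a minimal Python sketch that measures what share of a prompt's words each keyword takes up. This is only a heuristic for the writer: it is not how a model weighs words internally, it handles single-word keywords only, and the 10% cutoff is an arbitrary assumption.

```python
import re
from collections import Counter


def keyword_density(prompt: str, keywords: list[str]) -> dict[str, float]:
    """Fraction of the prompt's words accounted for by each single-word keyword."""
    words = re.findall(r"[a-z0-9']+", prompt.lower())
    counts = Counter(words)
    total = len(words) or 1  # avoid dividing by zero on an empty prompt
    return {kw: counts[kw.lower()] / total for kw in keywords}


def overused(prompt: str, keywords: list[str], max_share: float = 0.1) -> list[str]:
    """Keywords whose share of the prompt exceeds max_share (an arbitrary cutoff)."""
    density = keyword_density(prompt, keywords)
    return [kw for kw, share in density.items() if share > max_share]


if __name__ == "__main__":
    stuffed = "Explain quantum entanglement, quantum physics, and quantum computing for quantum beginners."
    print(overused(stuffed, ["quantum", "entanglement"]))
    # ['quantum']
```

Here "quantum" accounts for 4 of the 11 words and gets flagged, while "entanglement" appears once and passes.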

🤖 Aye-Aye Captain

Conversation with AI must take a prescriptive approach from the user side to yield the best exchange. It's no longer enough to simply ask the AI; we need to be assertive, with precision and control, to let it shine at its job. Prompting is an iterative process, and once we establish our foundation we can look at methods to supercharge results by shaping how the AI itself structures its thinking. One of the most powerful techniques for refining an LLM's output is role-playing: assigning the AI a specific persona before posing the main prompt, which sets the tone and focus of its response.

Imagine the difference between asking the chatbot a generic question about astrophysics versus prefacing that same question with "You are a world-renowned astrophysicist. Explain quantum entanglement." By establishing a professional authority, the latter prompt offers the machine a wealth of context in a single sentence, and tends to produce more precise and articulate answers. You can use this method to steer the model toward the register and depth of a particular discipline: a literary persona can draw out highly specific and intricate prose, while a scientist persona can yield more rigorous, technically framed explanations of STEM subjects. Beyond style, role-playing also shapes the model's approach to the problem: an LLM acting as a financial advisor might prioritize different details than one playing a marketing consultant. Think of establishing a persona as "pre-programming" the AI with a specialized mindset, guiding the model toward its most relevant processing pathways.
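Many chat APIs, OpenAI's Chat Completions among them, accept a list of messages with `role` and `content` fields, where a `system` message carries standing instructions such as a persona. Prefacing the user message, as in the example above, also works; the Python sketch below shows the system-message variant. `with_persona` is a hypothetical helper, and the sketch only builds the message lists; actually sending them to a model is left out.

```python
def with_persona(persona: str, question: str) -> list[dict[str, str]]:
    """Place the persona in a system message so it frames the user's question."""
    return [
        {"role": "system", "content": f"You are {persona}."},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    generic = [{"role": "user", "content": "Explain quantum entanglement."}]
    expert = with_persona("a world-renowned astrophysicist", "Explain quantum entanglement.")

    # Same question, two personas: each system message nudges the model to weigh different details.
    question = "Should our startup raise prices this quarter?"
    advisor = with_persona("a cautious financial advisor", question)
    marketer = with_persona("a growth-focused marketing consultant", question)

    for messages in (generic, expert, advisor, marketer):
        print(messages)
```

Keeping the persona separate from the question makes it easy to ask the same thing of several "experts" and compare what each one emphasizes.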

Building further on methods that shape how the model thinks, rather than just what it thinks about, we come to chain-of-thought prompting. When given a complex problem, a model can stumble if it tries to tackle every element at once as a single task. Chain-of-thought prompting, and the closely related practice of breaking a task into sequential prompts, guides the AI through the process by splitting the problem into smaller, more manageable pieces. For example, you might instruct an LLM: "First, create a detailed outline of the argument. Next, expand each outline point into a full paragraph. Finally, assemble them into a well-structured essay." Instead of handing it a "write this whole essay" prompt and hoping for the best, we break a challenging task into easily digestible steps, giving the model room to work methodically through the details and generate better outcomes. Prompting for an explicit, step-by-step reasoning process often yields outputs that surpass what a single basic prompt can achieve. This structured approach not only tends to produce more accurate answers but also lets us trace the AI's reasoning. This helps explain why major AI developers are increasingly investing in models built around multi-step, chain-of-thought reasoning, such as OpenAI's o1, which has taken the AI world by storm since fall 2024. Guiding the AI's decision making and analyzing the process behind it are equally important: when you understand how a model arrives at a given answer, you'll better understand how to adjust your own techniques to get more out of your prompts. The core message is: if you want the best result, break large tasks down into smaller components.
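Classic chain-of-thought prompting can be as light as asking the model to reason step by step before answering. The outline, then paragraphs, then essay example above is closer to what is often called prompt chaining, where each step's output feeds the next prompt. Here is a minimal Python sketch of that chain. `call_model` is a hypothetical hook for whatever LLM client you use, and `stub_model` is a fake model included only so the pipeline can run offline.

```python
from typing import Callable

# Any function that takes a prompt and returns the model's reply (hypothetical hook:
# wire it to whichever LLM client you actually use).
ModelFn = Callable[[str], str]


def write_essay(topic: str, call_model: ModelFn) -> str:
    """Outline -> paragraphs -> essay, feeding each step's output into the next."""
    # Step 1: ask only for the outline, one point per line.
    outline = call_model(
        f"First, create a detailed outline of an argument about {topic}. "
        "Return one point per line and nothing else."
    )
    points = [line.strip("-* \t") for line in outline.splitlines() if line.strip()]

    # Step 2: expand each point separately, so the model handles one piece at a time.
    paragraphs = [
        call_model(f"Expand this outline point into a full paragraph: {point}")
        for point in points
    ]

    # Step 3: assemble the pieces into the finished essay.
    return call_model(
        "Finally, assemble these paragraphs into a well-structured essay:\n\n"
        + "\n\n".join(paragraphs)
    )


def stub_model(prompt: str) -> str:
    """Offline stand-in for a real model, so the pipeline can be run and tested."""
    if prompt.startswith("First"):
        return "- Point A\n- Point B"
    if prompt.startswith("Expand"):
        return "Paragraph on " + prompt.split(": ", 1)[1]
    return prompt.split("\n\n", 1)[1]  # "assemble" by echoing the paragraphs back


if __name__ == "__main__":
    print(write_essay("remote work", stub_model))
```

Because every intermediate result (the outline, each paragraph) is an ordinary string, you can inspect or edit it between steps, which is what makes the model's "reasoning" traceable.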

While mastering this form of AI-whispering unlocks a world of possibility, it's crucial to acknowledge the ethical dimension of our work. Prompt engineering, like any technology, is neither inherently good nor bad; its impact depends on how we choose to use it. The responsibility falls on us, the users of these technologies, to employ our prompt-crafting skills for beneficial, ethical, and thoughtful purposes. For more information on prompt engineering, and on how Generative AI works in general, check out our class offering on Generative AI!

Cobi Tadros is a Business Analyst & Azure Certified Administrator with The Training Boss. Cobi holds a Master of Business Administration from the University of Central Florida and a Bachelor of Music from the New England Conservatory of Music. Cobi is certified on Microsoft Power BI and Microsoft SQL Server, with ongoing training on Python and cloud database tools. Cobi is also a passionate, professionally trained opera singer, and occasionally takes part in musical events with the local Orlando community. His passion for writing and the humanities brings an artistic flair to all his work!

Copyright © 2025 The Training Boss LLC